In 2022, US chipmaker Nvidia released the H100, one of the most powerful processors it had ever built — and one of its most expensive, costing about $40,000 each. The launch seemed badly timed, just as businesses sought to cut spending amid rampant inflation.

Then in November, ChatGPT was launched.

“We went from a pretty tough year last year to an overnight turnround,” said Jensen Huang, Nvidia’s chief executive. OpenAI’s hit chatbot was an “aha moment”, he said. “It created instant demand.”

ChatGPT’s sudden popularity has triggered an arms race among the world’s leading tech companies and start-ups, which are rushing to obtain the H100. Huang describes it as “the world’s first computer [chip] designed for generative AI”: artificial intelligence systems that can quickly create humanlike text, images and other content.

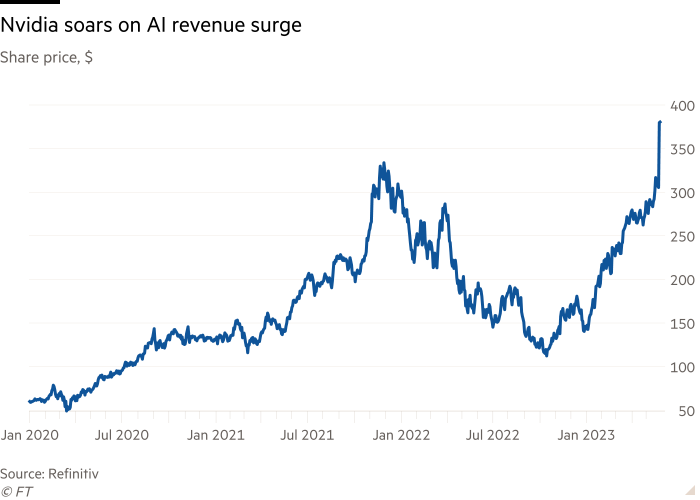

The value of having the right product at the right time became apparent this week. Nvidia announced on Wednesday that its sales for the three months ending in July would be $11bn, more than 50 per cent ahead of Wall Street’s previous estimates, driven by a revival in data centre spending by Big Tech and demand for its AI chips.

Investors’ response to the forecast added $184bn to Nvidia’s market capitalisation in a single day on Thursday, taking what was already the world’s most valuable chip company close to a $1tn valuation.

Nvidia is an early winner from the astronomical rise of generative AI, a technology that threatens to reshape industries, produce huge productivity gains and displace millions of jobs.

That technological leap is set to be accelerated by the H100, which is based on a new Nvidia chip architecture dubbed “Hopper”, named after the American programming pioneer Grace Hopper, and which has suddenly become the hottest commodity in Silicon Valley.

“This whole thing took off just as we’re going into production on Hopper,” said Huang, adding that manufacturing at scale began just a few weeks before ChatGPT debuted.

Huang’s confidence in continued gains stems in part from Nvidia’s ability to work with chip manufacturer TSMC to scale up H100 production and satisfy exploding demand from cloud providers such as Microsoft, Amazon and Google, from internet groups such as Meta and from corporate customers.

“This is among the most scarce engineering resources on the planet,” said Brannin McBee, chief strategy officer and founder of CoreWeave, an AI-focused cloud infrastructure start-up that was one of…