Bellwether semiconductor company Nvidia is due to present earnings after markets close on Wednesday, with all of Wall Street eager to learn whether the AI bull market still has legs.

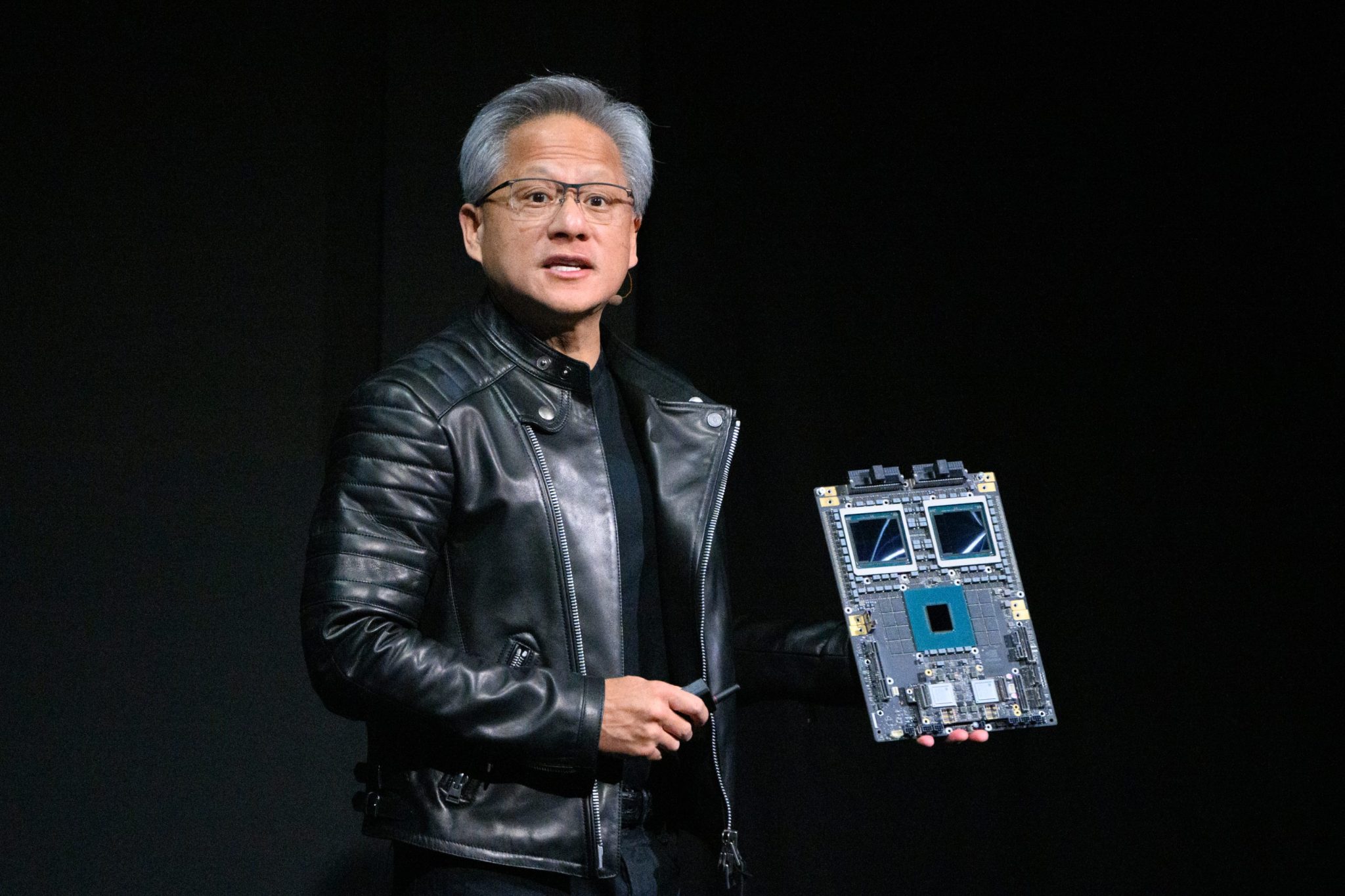

While founder Jensen Huang will feel the heat if he cannot deliver a bullish quarterly report, the pressure this time is not nearly as intense as in August, when the fate of the entire stock market seemingly hung on his every word after several big-name tech companies disappointed. At the time, Dan Ives of Wedbush Securities went so far as to call Nvidia’s results “the most important tech earnings in years.”

Yet Huang is still expected to set the tone for the industry moving forward as investors will be eager for clues on the health of the generative artificial intelligence boom. Nvidia has been a chief profiteer of the investment wave, since it supplies roughly 9 out of 10 AI training chips to data centers.

Its shares have tripled in value since the start of this year, while the tech-heavy Nasdaq Composite has only risen by a third over that same period.

“We expect another jaw dropper tomorrow from the Godfather of AI Jensen that will put jet fuel in this bull market engine,” wrote Ives on Tuesday, reaffirming his underlying investment thesis. In his view, Nvidia’s bombshell-proof market share effectively means it is the “only game in town” and can expect $1 trillion or more in capex spending from customers.

That doesn’t mean the bar isn’t rising, especially as Nvidia continues to lap the easier comparisons from the prior year’s quarters, before the GenAI boom.

Worries over booming GenAI investments flattening out

There is a growing debate over whether advancements in neural networks are starting to plateau, with the pace of innovation dropping as the vast amounts of fresh data needed to train large language models are seemingly exhausted.

Meta’s chief AI scientist Yann LeCun, one of the original luminaries in the field, warned that simply scaling the models with greater volumes of more powerful chips that can crunch even more data is not going to be enough; only a paradigm shift in approach will suffice.

“LLMs will *not* reach human-level intelligence,” he posted to Threads last week. “New architectures are needed.”

OpenAI’s recent product launch cadence has often been cited as an example. GPT-5 is still nowhere to be seen nearly two full…
